Many of our clients struggle to ensure that the solutions they create meet the needs of both their business and their customers. The symptoms of this failure vary from client to client and are best illustrated with anonymized quotes:

We make an initial release of a solution and we never return to it – it feels like it just doesn’t get ‘done’

We rarely measure the success or failure of the solutions we create. Instead we look at business performance – we either hit our numbers or we didn’t. If we don’t hit the numbers, it’s the fault of engineering and product. If we hit our numbers, sales gets credit

I don’t get it – we get a lot of velocity out of our engineering team, but we always have availability problems

A likely cause

The quotes above describe very different symptoms of a common problem. The first concerns the level of investment in a product and bringing it to maturity, the second a lack of value measurement and the allocation of recognition, and the third the availability and quality of the solutions the team creates. In our experience, the most common cause of each of these symptoms is that the Agile “Definition of Done” is undefined, implicitly defined, or, most commonly, incompletely defined.

The purpose of “Done”

“Done” comprises a number of criteria that must be met before any element of work (e.g. a story) is considered “complete” and can be counted for the purposes of velocity.

The benefits of “Done”

• Defining done removes ambiguity and uncertainty, and forces developers, product owners, and scrum team members to align on standards for completion.

• Helps foster good and efficient discussion around paths, tradeoffs, and completion during standups.

• If employed consistently across teams, helps to align velocity-related metrics and make them useful.

• Limits rework, or post-sprint work for important elements of the solution in question.

• When combined with velocity, incorporates a notion of “earned value”: teams are given an incentive to complete a solution, in contrast to the typical “effort expended” approach, which accounts for effort at its actual expense whether or not anything was finished. Put another way, teams don’t get credit for work until something has been completed.

A typical generic definition of “Done”

A solution is done when it:

• Is implemented to standards

• Has been code reviewed consistent with standards

• Has automated unit tests created to the unit coverage standard (70+%)

• Has passed automated integration testing and all other continuous integration checks

• Has all necessary support and end user documentation complete

• Has been reviewed by the product owner

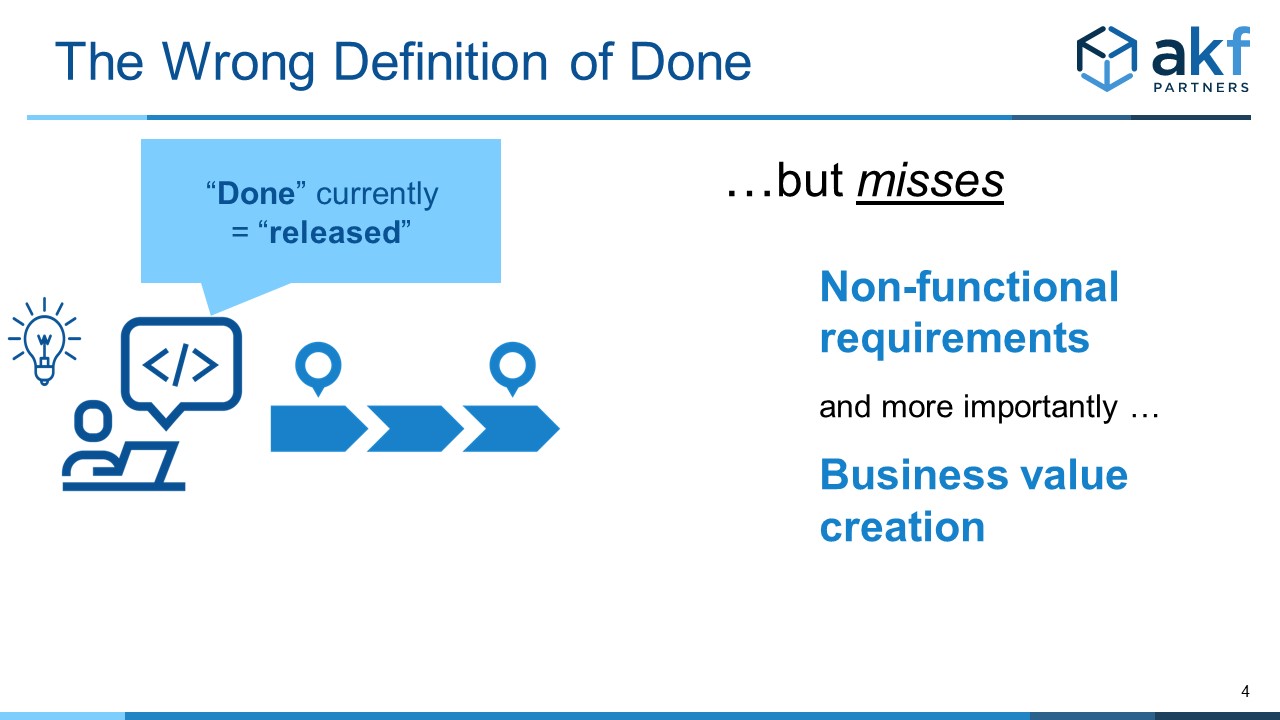

Necessary but Insufficient Definition

The above definition, while necessary, is incomplete and therefore insufficient for the needs of a company. The definition fails to account for:

• Business value creation (is it really “done” if we don’t achieve the desired results?)

• Non-functional requirements necessary to produce value such as availability, scalability, response time, cost of maintenance (cost of operations or goods sold), etc.

As a result, because metrics tied to value creation are not available, attributing credit for results becomes a subjective process.

Towards a Better Definition

Given the above, a better definition of done should include:

• Non-functional requirements necessary to achieve value creation

• Evaluation that the solution achieves some desired result, ideally one that is also incorporated into the stories themselves (some measurement or outcome the effort is meant to achieve)

Modifying the prior definition, we might now have:

A solution is done when it:

• Is implemented to standards

• Has been code reviewed consistent with standards

• Has automated unit tests created to the unit coverage standard (70+%)

• Has passed automated integration testing and all other continuous integration checks

• Has delivered all necessary support and end user documentation

• Has been reviewed by the product owner

• Meets the response time objective to end users at peak traffic

• Meets the availability target for one week in production and has passed an availability review

• Meets the cost of goods sold target for infrastructure or IaaS costs after one week

• Meets all other company NFRs (above are examples)

• Shows progress towards or achieves the business metrics it was meant to achieve (may be none for a partial release, or full metrics for the completion of an epic)
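As a rough illustration, the example definition above can be expressed as a checklist that a team evaluates per story. This is only a sketch under stated assumptions: the class name `DoneChecklist`, its field names, and the helper functions are hypothetical, and the 70% threshold is simply the example coverage standard quoted above. How a team records a partial release with no business metric attached is left to the team; here we assume the product owner sets that flag by agreement.

```python
from dataclasses import dataclass, fields
from typing import List

# Example unit coverage standard from the definition above (70+%).
COVERAGE_STANDARD_PCT = 70.0


@dataclass
class DoneChecklist:
    """Hypothetical per-story record of the example "done" criteria."""
    implemented_to_standards: bool = False
    code_reviewed: bool = False
    unit_coverage_pct: float = 0.0           # measured unit test coverage
    ci_checks_passed: bool = False           # integration tests and other CI checks
    documentation_delivered: bool = False    # support and end user documentation
    product_owner_reviewed: bool = False
    response_time_met_at_peak: bool = False
    availability_met_for_one_week: bool = False
    cogs_target_met_after_one_week: bool = False
    other_nfrs_met: bool = False
    business_metric_progress: bool = False   # assumption: set by the product owner


def unmet_criteria(checklist: DoneChecklist) -> List[str]:
    """Return the names of criteria that are not yet satisfied."""
    unmet = []
    for field in fields(checklist):
        value = getattr(checklist, field.name)
        if field.name == "unit_coverage_pct":
            # Coverage is numeric, so compare it against the standard.
            if value < COVERAGE_STANDARD_PCT:
                unmet.append(field.name)
        elif not value:
            unmet.append(field.name)
    return unmet


def is_done(checklist: DoneChecklist) -> bool:
    """A story is done only when every criterion is met."""
    return not unmet_criteria(checklist)
```

A story that has shipped but has not yet completed its week in production would return `availability_met_for_one_week` (and likely the cost criterion) from `unmet_criteria`, which makes the gap between “released” and “done” explicit.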

Who is Responsible for Evaluating “Done”

This is an agile process, so the team is responsible for their own measurement. In most teams this means the PO and Scrum Master. As a business we also “trust and verify”, so leaders should double-check that value metrics have indeed been met in normal operations reviews.

The Cons of the “Right Definition of Done”

The largest impact is on when the earned value component of velocity can be realized. Here we’ve been careful to bound the evaluation at one week after delivery, so that velocity is pushed out by just a week to allow for evaluation in production. In addition, there is a bit more record keeping (ostensibly for the scrum master and product owner), but its cost is very low relative to the benefit of alignment with business objectives and customer needs.

Keeping the Old Velocity Metric

There is some value in understanding what gets completed as well as what is truly “done”. If this is the case, just track both velocities. Call one “release velocity” and the other “done velocity” or “value velocity”. The overhead is not that high, and scrum masters should easily be able to do this. Now you’ll have metrics to help you understand the gap between what you first release and what finally creates value. This gap is as useful for problem identification as “find-fix” charts are in evaluating completion of quality assurance checks.
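Tracking both numbers can be as simple as summing story points under two different conditions. The sketch below is illustrative only: the `Story` record and the `velocities` function are hypothetical names, and it assumes each story carries a point estimate plus two flags maintained by the scrum master.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Story:
    """Hypothetical story record for one sprint."""
    points: int
    released: bool  # shipped to production
    done: bool      # also met the full definition of done, including production criteria


def velocities(stories: List[Story]) -> Tuple[int, int, int]:
    """Return (release velocity, done velocity, gap) in story points."""
    release = sum(s.points for s in stories if s.released)
    # A story can only count as done if it has actually been released.
    done = sum(s.points for s in stories if s.released and s.done)
    return release, done, release - done


sprint = [
    Story(points=5, released=True, done=True),
    Story(points=3, released=True, done=False),
    Story(points=8, released=False, done=False),
]
release_velocity, done_velocity, gap = velocities(sprint)
```

In this made-up sprint, 8 points were released but only 5 met the full definition, leaving a gap of 3 points. A gap that stays large from sprint to sprint suggests releases are not yet producing the outcomes they were meant to.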

The Biggest Reason for The Right Definition of Done

Hopefully the answer to this is somewhat obvious: by changing the definition of done, we align ourselves to both our customer and business needs. It helps engineers focus on customer outcomes rather than just on how something should “work”. Engineers too often focus on a problem from their perspective forward rather than from the customer needs backwards.